* Please refer to the English Version as our Official Version.

Have you heard of Moravec's paradox? It observes that, for artificial intelligence (AI) systems, advanced reasoning requires very little computing power, while the perception and movement skills that humans take for granted require enormous computing resources. In other words, complex logical tasks are easier for AI than the basic sensorimotor tasks that humans perform instinctively. The paradox highlights the gap between current AI and human cognitive abilities.

People are inherently multimodal. Each of us is like an intelligent terminal: we usually go to school for education and training, but the purpose and outcome of that learning is the ability to work and live independently, without always relying on external instructions and control.

We understand the world around us through various sensory modes such as vision, language, sound, touch, taste, and smell, and then assess the situation, analyze, reason, make decisions, and take action.

After years of progress in sensor fusion and AI, robots are now equipped with multimodal sensors. As we bring more computing power to edge devices such as robots, these devices are becoming increasingly intelligent. They can perceive their surroundings, understand and communicate in natural language, sense touch through digital sensing interfaces, and, by combining accelerometers, gyroscopes, and magnetometers, measure the robot's specific force, angular velocity, and even the magnetic field around it.
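Combining accelerometer and gyroscope readings is a classic sensor-fusion problem. The sketch below is a minimal illustration, assuming a single tilt (pitch) axis and a simple complementary filter rather than any particular robot's estimator: the gyroscope's angular velocity is integrated for short-term accuracy, while the gravity direction seen by the accelerometer corrects long-term drift. The function name, the blend factor `alpha = 0.98`, and the sample data are illustrative assumptions.

```python
import math


def complementary_filter(samples, dt, alpha=0.98, initial_pitch=0.0):
    """Estimate pitch (radians) from (gyro_rate, accel_x, accel_z) samples.

    gyro_rate: angular velocity about the pitch axis in rad/s (gyroscope)
    accel_x, accel_z: specific-force components in m/s^2 (accelerometer)
    """
    pitch = initial_pitch
    estimates = []
    for gyro_rate, accel_x, accel_z in samples:
        # Short-term estimate: integrate the gyroscope's angular velocity.
        gyro_pitch = pitch + gyro_rate * dt
        # Long-term reference: the tilt implied by the measured gravity vector.
        accel_pitch = math.atan2(accel_x, accel_z)
        # Blend: trust the gyro at high frequency, the accelerometer at low frequency.
        pitch = alpha * gyro_pitch + (1.0 - alpha) * accel_pitch
        estimates.append(pitch)
    return estimates


if __name__ == "__main__":
    g = 9.81
    true_pitch = 0.1
    # Stationary, tilted robot: the gyro reports only a small bias (0.01 rad/s),
    # and the accelerometer sees gravity projected onto its axes.
    samples = [(0.01, g * math.sin(true_pitch), g * math.cos(true_pitch))] * 2000
    print(round(complementary_filter(samples, dt=0.01)[-1], 3))
```

Integrating the biased gyro alone would drift without bound; with the accelerometer correction, the estimate settles close to the true tilt, offset only by a small bias-dependent term. Production systems often use Kalman-style filters instead, but the idea of weighting sensors by their error characteristics is the same.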

Entering a new era of robots and machine cognition

Before the emergence of Transformers and Large Language Models (LLMs), implementing multimodality in AI typically required the use of multiple separate models responsible for different types of data (text, images, audio), and the integration of different modalities through complex processes.

With the emergence of Transformer models and LLMs, multimodality has become more integrated: a single model can process and understand multiple data types simultaneously, giving AI systems a more comprehensive perception of their environment. This shift greatly improves the efficiency and effectiveness of multimodal AI applications.

Although LLMs such as GPT-3 are primarily text-based, the industry has made rapid progress toward multimodality. From OpenAI's CLIP and DALL·E to today's Sora and GPT-4o, these models all move toward multimodal and more natural human-computer interaction. For example, CLIP learns to associate images with natural-language descriptions, bridging visual and textual information, while DALL·E generates images from textual descriptions. Google's Gemini models have followed a similar evolution.
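CLIP's central idea is to embed images and text in a shared vector space, so that a matching image and caption have high cosine similarity. The sketch below shows zero-shot classification in that style: given one image embedding and several caption embeddings, it picks the caption with the highest similarity. The tiny hand-written vectors stand in for real encoder outputs and are purely hypothetical; CLIP itself learns its image and text encoders with a contrastive objective, which this inference-only sketch does not cover.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def zero_shot_classify(image_embedding, caption_embeddings):
    """Return (best_caption, scores), scoring each caption against the image."""
    scores = {
        caption: cosine_similarity(image_embedding, emb)
        for caption, emb in caption_embeddings.items()
    }
    best = max(scores, key=scores.get)
    return best, scores


if __name__ == "__main__":
    # Hypothetical 3-D embeddings; real CLIP embeddings have hundreds of dimensions.
    image = [0.9, 0.1, 0.2]
    captions = {
        "a photo of a robot arm": [0.8, 0.2, 0.1],
        "a photo of a cat": [0.1, 0.9, 0.3],
        "a photo of a car": [0.2, 0.1, 0.95],
    }
    best, _ = zero_shot_classify(image, captions)
    print(best)  # prints: a photo of a robot arm
```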

In 2024, multimodal development has accelerated. In February, OpenAI released Sora, which can generate realistic or imaginative videos from textual descriptions. On reflection, this could offer a promising path toward building a general-purpose world simulator, or become an important tool for training robots. Three months later, GPT-4o significantly improved human-computer interaction and can reason across audio, vision, and text in real time. GPT-4o is a single new model trained end-to-end on text, visual, and audio information. This eliminates the two modality conversions of earlier pipelines (from the input modality to text, and from text to the output modality) and significantly improves performance.

In the same week in February, Google released Gemini 1.5, significantly extending the context length to 1 million tokens. This means that 1.5 Pro can process a large amount of information at once: one hour of video, 11 hours of audio, codebases with over 30,000 lines of code, or over 700,000 words. Gemini 1.5 builds on Google's leading research on Transformers and the Mixture-of-Experts (MoE) architecture, and Google has also released open 2B and 7B models (Gemma) that can be deployed at the edge. At Google I/O in May, in addition to doubling the context length to 2 million tokens and releasing a series of generative AI tools and applications, Google discussed its vision for Project Astra, a universal AI assistant that can process multimodal information, understand the user's context, and converse with people very naturally.
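A Mixture-of-Experts layer routes each input to only a few "expert" sub-networks, so the compute per token stays small even as the total parameter count grows. The sketch below shows one common variant of top-k gating: a softmax over router scores, selection of the k highest, and a weighted sum of only those experts' outputs. It is a generic illustration, not Gemini's actual routing; the scalar inputs, the toy experts, `k = 2`, and the function names are all assumptions.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, gate_logits, experts, k=2):
    """Combine the outputs of the top-k experts, weighted by renormalized gate scores.

    x: input value (a scalar here to keep the sketch small)
    gate_logits: one router score per expert for this input
    experts: list of callables; only the k selected experts are evaluated
    """
    probs = softmax(gate_logits)
    top_k = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:k]
    weight_sum = sum(probs[i] for i in top_k)
    output = sum(probs[i] / weight_sum * experts[i](x) for i in top_k)
    return output, top_k


if __name__ == "__main__":
    # Four toy "experts"; in a real model each would be a feed-forward network.
    experts = [lambda v: 2 * v, lambda v: v + 1, lambda v: -v, lambda v: v * v]
    y, chosen = moe_forward(3.0, gate_logits=[2.0, 1.0, 0.1, 1.5], experts=experts)
    print(chosen, y)
```

Only two of the four experts run for this input, which is the property that lets MoE models scale capacity without a proportional increase in per-token compute.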

As the company behind the open-source LLM Llama, Meta has also joined the race toward artificial general intelligence (AGI).

This true multimodality greatly enhances the level of machine intelligence and will bring new paradigms to many industries.

For example, robots used to have very limited uses. They had some sensors and motion capabilities, but generally speaking, they did not have a "brain" to learn new things and could not adapt to unstructured, unfamiliar environments.

Multimodal LLMs are expected to transform the analytical, reasoning, and learning capabilities of robots, shifting them from specialized to general-purpose machines. PCs, servers, and smartphones are all outstanding general-purpose computing platforms that run a wide variety of software applications to deliver rich and diverse functions. Generality helps expand scale, which brings economies of scale and significantly lower prices; lower prices in turn drive adoption in more fields, forming a virtuous cycle.

Elon Musk recognized the advantages of general-purpose technology early on. Tesla's robots have evolved from Bumblebee in 2022 to Optimus Gen 1, announced in March 2023, and Gen 2 at the end of 2023, with their versatility and learning capabilities steadily improving. Over the past 6 to 12 months, we have witnessed a series of breakthroughs in robotics and humanoid robots.

New technologies behind the next generation of robots and embodied intelligence

There is no doubt that we still have a lot of work to do before embodied intelligence reaches mass production. We need lighter designs, longer running times, and faster, more powerful edge computing platforms to process and fuse sensor data so that robots can make timely decisions and control their actions.

We are also moving toward humanoid robots. Over thousands of years, human civilization has built environments designed specifically for humans, and those environments are everywhere. Because humanoid robots resemble humans in form, they are expected to interact easily with people and their surroundings and to carry out necessary operations in human living spaces. Such systems will be well suited to dirty, dangerous, and tedious tasks: patient care and rehabilitation, service work in the hotel industry, teaching aids or study companions in education, and hazardous jobs such as disaster response and handling dangerous substances. These applications exploit the humanoid form to enable natural human-machine interaction, move through human-centered spaces, and perform tasks that are typically difficult for traditional robots.

Many AI and robotics companies have pursued new research and collaborations on training robots to reason and plan better in new, unstructured environments. As the robot's new "brain," models pre-trained on large amounts of data generalize well, enabling robots to understand their environment more comprehensively, adapt their actions based on sensory feedback, and optimize performance across dynamic environments.

For example, Boston Dynamics' robot dog Spot can serve as a museum tour guide. Spot can interact with visitors, introduce them to various exhibits, and answer their questions. This may sound surprising, but in this use case, Spot's entertainment value, interactivity, and nuanced performance matter more than getting every fact right.

Robotics Transformer: The New Brain of Robots

Robotics Transformer (RT) is developing rapidly and can convert multimodal inputs directly into encoded actions. On tasks it has seen before, Google DeepMind's RT-2 performs as well as its predecessor RT-1, with a success rate close to 100%. However, because RT-2 is built on PaLM-E (an embodied multimodal language model for robotics) and PaLI-X (a large-scale multilingual vision-language model not designed specifically for robots), it generalizes better and outperforms RT-1 on tasks it has not seen before.
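One way to "convert inputs into action encoding" is to represent each continuous action dimension as a discrete token, so a language-model backbone can emit actions the same way it emits words; the published RT-1 and RT-2 work describes discretizing each action dimension into 256 uniform bins. The sketch below shows that encode/decode step under stated assumptions: every dimension shares the range [-1, 1], and the function names and example action are hypothetical, not taken from the RT codebase.

```python
NUM_BINS = 256


def encode_action(action, low=-1.0, high=1.0, num_bins=NUM_BINS):
    """Map each continuous action value to an integer token in [0, num_bins - 1]."""
    tokens = []
    for value in action:
        clipped = min(max(value, low), high)
        fraction = (clipped - low) / (high - low)
        # fraction == 1.0 would yield num_bins, so clamp it into the last bin.
        tokens.append(min(int(fraction * num_bins), num_bins - 1))
    return tokens


def decode_action(tokens, low=-1.0, high=1.0, num_bins=NUM_BINS):
    """Map tokens back to the centers of their bins."""
    bin_width = (high - low) / num_bins
    return [low + (t + 0.5) * bin_width for t in tokens]


if __name__ == "__main__":
    # Hypothetical 3-D end-effector displacement, normalized to [-1, 1].
    action = [0.0, -1.0, 1.0]
    tokens = encode_action(action)
    print(tokens)                  # prints: [128, 0, 255]
    print(decode_action(tokens))   # each value within half a bin of the original
```

The round trip loses at most half a bin width (about 0.004 for this range), a precision trade-off that lets actions share the model's token vocabulary.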

LLaVA (Large Language and Vision Assistant), developed with Microsoft Research, uses the language-only GPT-4 to generate a new kind of multimodal instruction-following data that tightly integrates text and visual content, which is very useful for robotic tasks. On release, LLaVA showed strong multimodal chat abilities and set a new state of the art on the ScienceQA benchmark, surpassing the reported average human accuracy.
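Multimodal instruction-following data pairs an image with a conversation about it. The sketch below shows one such record and a helper that flattens it into a single prompt string. It is loosely modeled on the conversation-style JSON released with LLaVA, but the field names, role labels, file name, and prompt layout here should be treated as illustrative assumptions.

```python
import json

# A hypothetical record: one image plus a human/assistant conversation about it.
record = {
    "id": "sample-0001",
    "image": "kitchen_scene.jpg",
    "conversations": [
        {"from": "human", "value": "<image>\nWhat object is closest to the robot's gripper?"},
        {"from": "gpt", "value": "A red mug on the edge of the counter."},
    ],
}

# Assumed mapping from record roles to prompt labels.
ROLE_NAMES = {"human": "USER", "gpt": "ASSISTANT"}


def to_prompt(sample):
    """Flatten a conversation record into one prompt string.

    The <image> placeholder marks where visual tokens would be inserted by the
    model (LLaVA projects image features into the language model's embedding
    space); here it is simply kept as text.
    """
    lines = [f"{ROLE_NAMES[turn['from']]}: {turn['value']}" for turn in sample["conversations"]]
    return "\n".join(lines)


if __name__ == "__main__":
    print(json.dumps(record, indent=2))
    print(to_prompt(record))
```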

As mentioned earlier, Tesla's entry into humanoid and general-purpose AI robots is significant, not only because Optimus is designed for scale and mass production, but also because the technology foundation Tesla built for cars, including Autopilot and Full Self-Driving (FSD), can be applied to robots. Tesla also has in-house smart-manufacturing use cases in which Optimus can be deployed in the production of its electric vehicles.

Arm is the cornerstone of future robotics technology

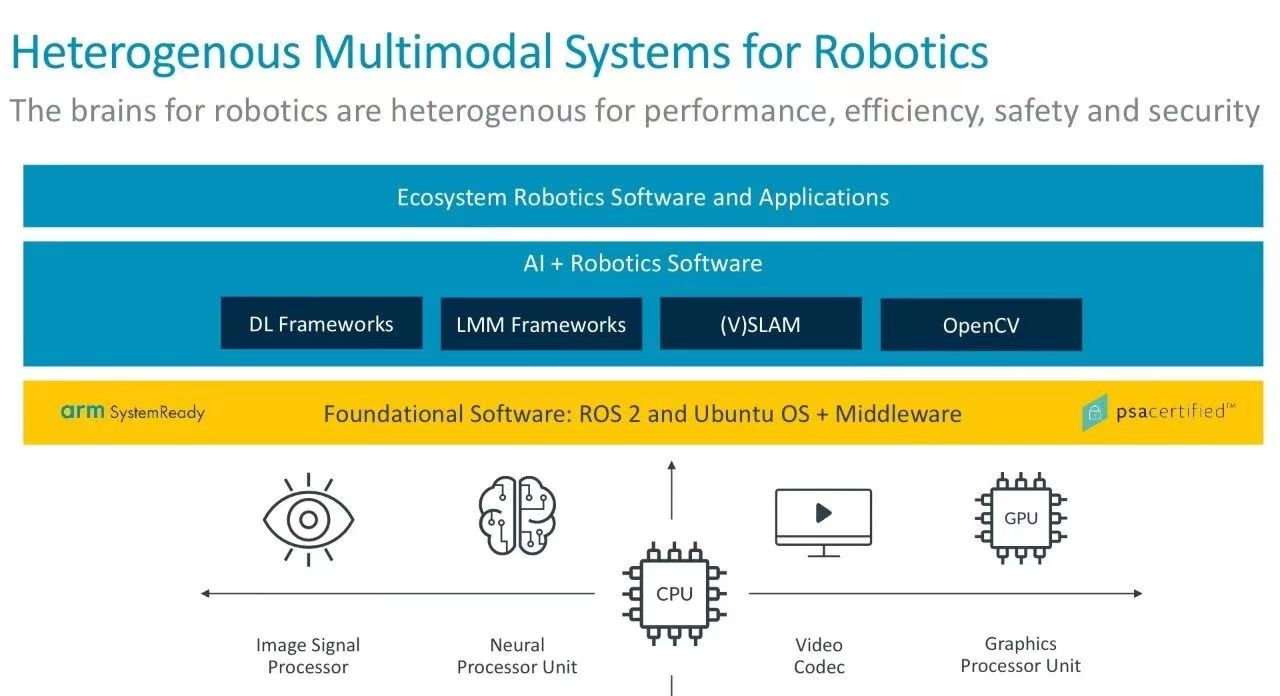

Arm believes that the robot's brain, covering both the "cerebrum" and the "cerebellum," should be a heterogeneous AI computing system that delivers excellent performance, real-time response, and high energy efficiency.

Robotics involves a wide range of tasks, including basic computation (such as sending signals to and receiving signals from motors), advanced data processing (such as interpreting image and sensor data), and running the multimodal LLMs mentioned earlier. CPUs are well suited to general-purpose tasks, while AI accelerators and GPUs handle parallel workloads such as machine learning (ML) and graphics processing more efficiently. Additional accelerators such as image signal processors and video codecs can also be integrated to enhance the robot's vision and its storage and transmission efficiency. In addition, the CPU should be capable of real-time response and able to run operating systems such as Linux along with ROS software packages.
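This division of labor can be pictured as a simple policy that assigns each workload to the compute unit best suited to it and falls back to the CPU when that unit is not present on the chip. The sketch below is hypothetical: the workload kinds, unit names, and mapping are illustrative assumptions, not an Arm API or a real scheduler.

```python
from enum import Enum


class Unit(Enum):
    CPU = "cpu"  # general-purpose cores
    GPU = "gpu"  # graphics and parallel compute
    NPU = "npu"  # AI accelerator
    ISP = "isp"  # image signal processor
    VPU = "vpu"  # video codec


# Hypothetical preferred unit for each workload kind.
PREFERRED_UNIT = {
    "motor_control": Unit.CPU,     # latency-sensitive control logic
    "sensor_fusion": Unit.CPU,
    "llm_inference": Unit.NPU,     # parallel ML workload
    "graphics": Unit.GPU,
    "camera_pipeline": Unit.ISP,
    "video_encode": Unit.VPU,
}


def assign(workload_kind, available_units):
    """Return the preferred unit if the SoC has it; otherwise fall back to the CPU."""
    preferred = PREFERRED_UNIT.get(workload_kind, Unit.CPU)
    return preferred if preferred in available_units else Unit.CPU


if __name__ == "__main__":
    soc = {Unit.CPU, Unit.GPU, Unit.NPU}  # hypothetical chip without ISP or VPU
    for kind in ["motor_control", "llm_inference", "camera_pipeline"]:
        print(kind, "->", assign(kind, soc).value)
```

A real scheduler would also weigh current load, power budget, and latency targets; the static table only captures the basic idea that each class of work has a natural home in a heterogeneous system.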

Moving up the robot software stack, the operating system layer may also need a real-time operating system (RTOS) that reliably handles time-critical tasks, as well as a Linux distribution tailored for robotics, together with frameworks such as ROS that provide services designed for heterogeneous computing clusters. We believe that Arm-initiated standards and certification programs such as SystemReady and PSA Certified will help scale robot software development. SystemReady aims to ensure that standard rich-OS distributions run on a wide range of Arm-based system-on-chip (SoC) designs, while PSA Certified helps simplify security implementation to meet regional security and regulatory requirements for connected devices.
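An RTOS exists to guarantee that periodic, time-critical work such as a motor-control loop runs on schedule. Python on a general-purpose OS offers no such guarantee, but the sketch below shows the pattern such a task typically follows: a fixed period, wake-up times computed from the start time so delays do not accumulate, and explicit counting of missed deadlines. The function name, the 1 ms period, and the placeholder control step are assumptions for illustration.

```python
import time


def run_periodic(step, period_s, iterations):
    """Call step() once per period_s seconds; return the number of missed deadlines.

    Wake-up times advance from the start time rather than from "now", so small
    delays do not accumulate into drift. After an overrun, this sketch does not
    skip slots: it runs back-to-back until it catches up with the schedule.
    """
    missed = 0
    next_wakeup = time.monotonic()
    for _ in range(iterations):
        step()
        next_wakeup += period_s
        remaining = next_wakeup - time.monotonic()
        if remaining > 0:
            time.sleep(remaining)
        else:
            # The step overran its slot; a real-time system would flag this.
            missed += 1
    return missed


if __name__ == "__main__":
    ticks = []
    # Placeholder control step; a real loop would read sensors and command motors.
    misses = run_periodic(lambda: ticks.append(time.monotonic()), period_s=0.001, iterations=100)
    print(len(ticks), "iterations,", misses, "missed deadlines")
```

On an RTOS the scheduler, not the loop itself, enforces priorities and bounds jitter, which is why time-critical control is often kept there while Linux and ROS handle higher-level tasks.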

The advancement of large multimodal models and generative AI heralds a new era for AI robots and humanoid robots. To bring robotics into the mainstream in this era, energy efficiency, security, and functional safety are essential in addition to AI computing and ecosystems. Arm processors are already widely used in robotics, and we look forward to working closely with the ecosystem to make Arm the cornerstone of future AI robots.

This is reported by Top Components, a leading supplier of electronic components in the semiconductor industry.

They are committed to providing customers around the world with essential, obsolete, licensed, and hard-to-find parts.

Media Relations

Name: John Chen

Email: salesdept@topcomponents.ru